And here’s what you need to know to get started.

The European Union published the first-of-its-kind Artificial Intelligence Act (AI Act) on 12 July 2024, codifying a broad regulatory mandate for artificial intelligence development and use. The European Parliament passed the AI Act with the goal of incentivizing, and requiring, “safe, transparent, traceable, non-discriminatory, and environmentally friendly” artificial intelligence systems.1 Significantly, the Act applies not only to AI providers located within the EU, but also to any provider whose AI output is used in the EU.

Artificial intelligence is unquestionably one of the most dominant trends in the modern economy, touching essentially every industry and most digital interactions. The rapid development and ubiquitous availability of AI have outpaced government regulation. Developers, businesses, academic institutions, and individual end-users have, to date, proceeded largely unhindered by strict legal regulations. The European Union, sensing the potential harms and risks of unregulated AI, presented a plan for implementing guardrails and safeguards for this uncharted territory. That plan may impact companies with AI software or products that are used in the EU.

Key Provisions

The AI Act defines an AI system as:

a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.2

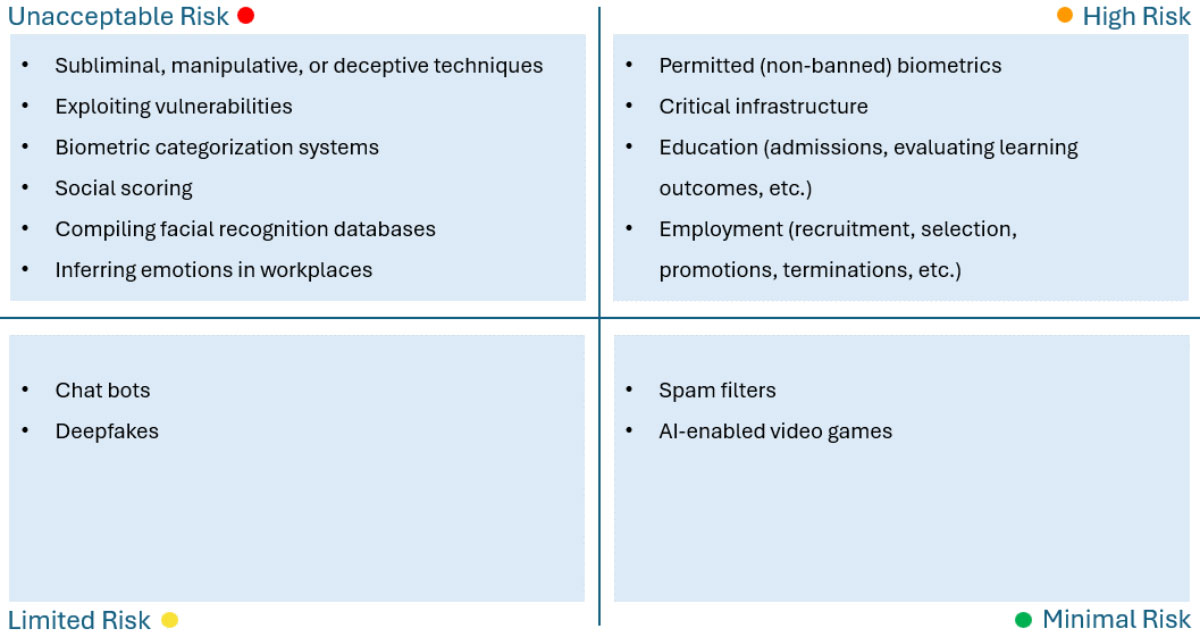

At its core, the Act classifies AI systems into one of four categories based on risk: Unacceptable Risk, High Risk, Limited Risk, and Minimal Risk. Rules, regulations, and general permissibility vary by category.

Unacceptable Risk AI systems are outright prohibited by the Act.

High Risk AI systems require developers and providers to establish a risk management system, conduct data governance, draw up technical documentation, design systems for record-keeping, and more. For a comprehensive list of all requirements for High Risk AI developers and providers, please consult the Act or contact one of our attorneys. Users of High Risk AI systems (those who use or deploy High Risk AI systems in a professional capacity) are also subject to certain requirements.

Limited Risk AI systems are subject to lighter transparency requirements. Providers and developers of Limited Risk AI systems must inform end users that they are interacting with artificial intelligence.

Minimal Risk AI systems remain largely unregulated. This is the residual category for AI systems that do not fall into one of the other three tiers, so it covers many everyday uses of artificial intelligence, such as spam filters. The requirements for this risk category are subject to change.

General-Purpose AI Models

In addition to the risk-based categories, the EU carves out specific obligations for what it terms “general-purpose AI models.”

Chapter V of the Act classifies certain general-purpose AI (GPAI) models as presenting systemic risk based, in relevant part, on the “high impact capabilities” of such models.3 An assessment of a model’s high-impact capabilities is both discretionary (based on a decision of the Commission, which may follow an alert from the scientific panel) and objective: a model is presumed to have high-impact capabilities when the “cumulative amount of computation used for its training” exceeds 10^25 floating point operations.4 Providers of GPAI models are obligated under the Act to maintain documentation of the model, including a general description, training methods, and an evaluation of results.5
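For teams that track training compute, the objective prong of this test can be pictured as a simple comparison. The sketch below is illustrative only: the function and constant names are hypothetical, and it assumes the 10^25 floating-point-operation figure in Article 51(2) as enacted, which the Commission may amend. It is not a legal determination and does not capture the discretionary prong.

```python
# Illustrative sketch only. The names below are hypothetical, and the
# threshold assumes Article 51(2) as enacted (the Commission may amend it).
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def presumed_high_impact(training_flops: float) -> bool:
    """Return True when cumulative training compute exceeds the threshold.

    This mirrors only the compute-based presumption; a model below the
    threshold may still be designated by a Commission decision.
    """
    if training_flops < 0:
        raise ValueError("training_flops must be non-negative")
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD
```

A model trained with, say, 2e25 floating point operations would return True here, while one at 5e23 would return False.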

Implications for Your Business

Step 1: Identify how, if at all, the Act applies to your business.

- Are you located in the EU, or is your AI output used in the EU?

- What risk category does your AI fall into?

- Are you a developer/provider, or a user?

Step 2: Conduct a thorough in-house AI inventory.

- What systems, divisions, or processes are driven by AI?

- How and where does your business consolidate, organize, and maintain information and metadata pertaining to each of these systems?

Step 3: Assess your current compliance.

- Do your AI systems and outputs comply with the specified requirements?

- Where are the gaps in compliance?

Step 4: Plan and implement measures for compliance.

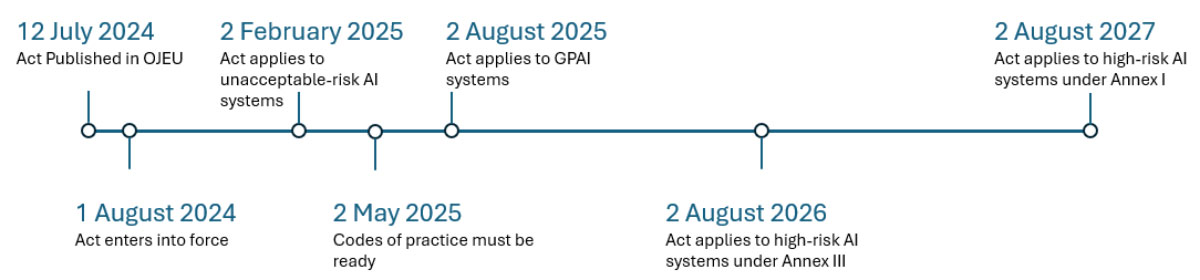

- When does each system need to be compliant (see Timeline below)?

- What changes, processes, or documents are needed for compliance?

- How will you continually monitor compliance with both current and future AI systems?
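For businesses that keep their AI inventory in software, the four steps above might be sketched as a small record type plus a gap check. Everything in this example is an assumption made for illustration: the class, field, and control names are hypothetical, the control lists are simplified labels taken from the provider-side summary in this article, and none of it is a complete statement of the Act's requirements.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified, hypothetical control labels based on the provider-side
# summary in this article; not a full list of the Act's requirements.
REQUIRED_CONTROLS = {
    RiskTier.HIGH: {
        "risk_management_system",
        "data_governance",
        "technical_documentation",
        "record_keeping",
    },
    RiskTier.LIMITED: {"ai_interaction_notice"},
    RiskTier.MINIMAL: set(),
}


@dataclass
class AISystemRecord:
    name: str
    role: str          # Step 1: "provider" or "user" (kept as metadata only)
    in_scope: bool     # Step 1: located in the EU, or output used in the EU
    tier: RiskTier     # Step 1: risk category
    controls_in_place: set[str] = field(default_factory=set)  # Step 2


def compliance_gaps(record: AISystemRecord) -> set[str]:
    """Step 3: return the controls still missing for one inventory record."""
    if not record.in_scope:
        return set()
    if record.tier is RiskTier.UNACCEPTABLE:
        # Prohibited systems cannot be brought into compliance by controls.
        return {"prohibited_use"}
    return REQUIRED_CONTROLS[record.tier] - record.controls_in_place


# Hypothetical inventory entry for illustration.
record = AISystemRecord(
    name="resume-screener",
    role="provider",
    in_scope=True,
    tier=RiskTier.HIGH,
    controls_in_place={"technical_documentation"},
)
print(sorted(compliance_gaps(record)))
# ['data_governance', 'record_keeping', 'risk_management_system']
```

The output of the gap check feeds Step 4: each missing control becomes a work item with an owner and a deadline drawn from the Act's timeline.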

Timeline

The Act takes effect in phases, with most major milestones occurring within three years of its entry into force on 1 August 2024.

Questions about the Act or how to prepare your business?

Reach out to our AI Practice Group, whose attorneys are well versed in AI systems. We are here to help.

1 EU AI Act: first regulation on artificial intelligence. European Parliament, Directorate General for Communication. 18 June 2024.

2 See Article 3(1) of the Act.

3 See Article 51(1)(a) of the Act.

4 See Article 51(2) of the Act.

5 See Article 53 and Annex XI of the Act.